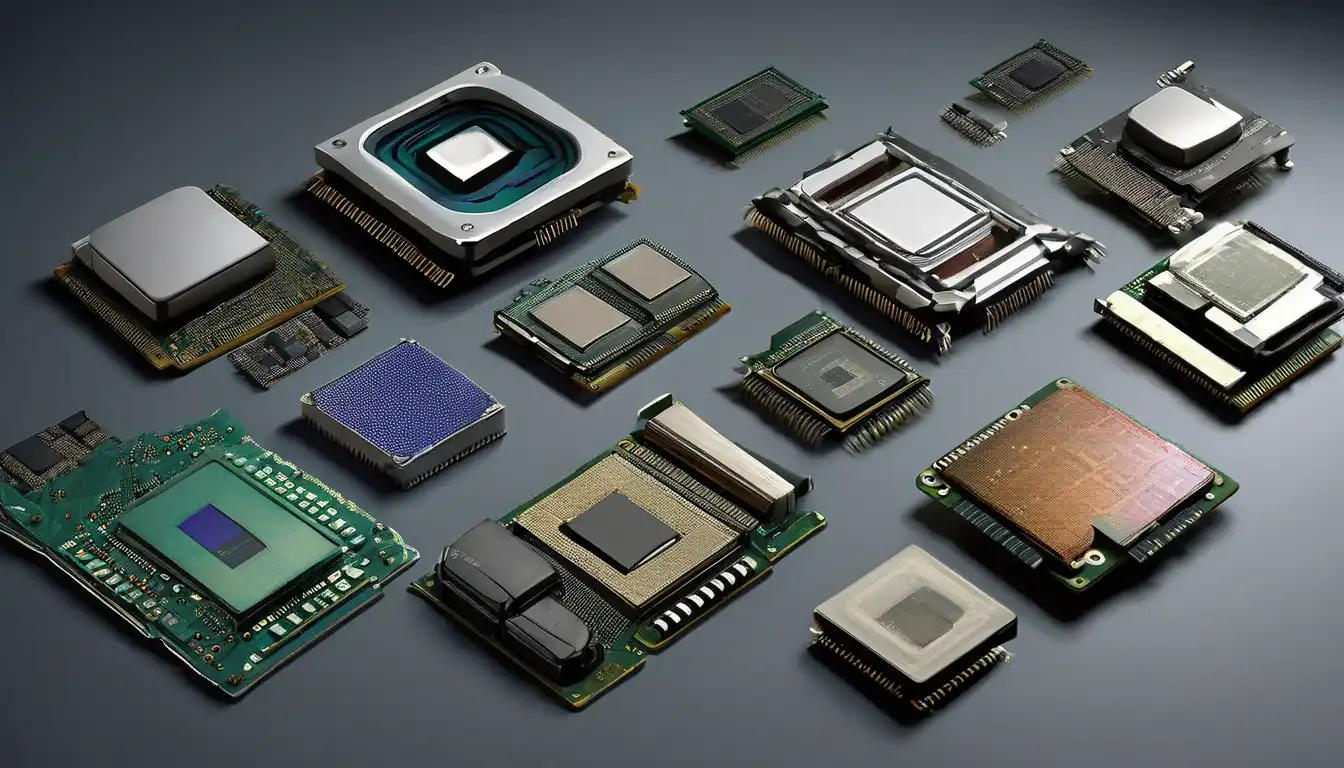

The Dawn of Computing: Early Processor Technologies

The evolution of computer processors represents one of the most remarkable technological journeys in human history. Beginning with vacuum tube systems in the 1940s, processors have undergone revolutionary changes that have fundamentally transformed how we live, work, and communicate. The first general-purpose electronic computers, such as ENIAC, used thousands of vacuum tubes; ENIAC alone contained about 17,500 of them, consumed enormous amounts of power, and required constant maintenance. Machines of this era operated at speeds measured in kilohertz (ENIAC's clock ran at 100 kHz), a far cry from today's multi-gigahertz processors.

The transistor, invented at Bell Labs in 1947, marked a pivotal moment in processor evolution once it began replacing vacuum tubes in computers during the 1950s. Transistors were smaller, more reliable, and consumed significantly less power than vacuum tubes. This breakthrough led to the development of second-generation computers that were more practical for commercial and scientific applications. The transition from vacuum tubes to transistors set the stage for the integrated circuit revolution that would follow.

The Integrated Circuit Revolution

The integrated circuit (IC), which allowed multiple transistors to be fabricated on a single chip, emerged at the end of the 1950s and spread through the industry during the 1960s. Jack Kilby (Texas Instruments, 1958) and Robert Noyce (Fairchild Semiconductor, 1959) independently developed the first working integrated circuits, and Kilby was later awarded the 2000 Nobel Prize in Physics for this work. The IC dramatically reduced the size and cost of processors while improving their reliability and performance. Early ICs contained only a few transistors, but they paved the way for increasingly complex designs.

By the late 1960s, manufacturers were producing chips with hundreds of transistors. The development of MOS (Metal-Oxide-Semiconductor) technology further accelerated processor evolution, enabling higher transistor densities and lower power consumption. These advancements made computers more accessible to businesses and research institutions, setting the foundation for the personal computing revolution.

The Microprocessor Era Begins

1971 marked a watershed moment with Intel's introduction of the 4004, the world's first commercially available microprocessor. This 4-bit processor contained 2,300 transistors and operated at 740 kHz. While primitive by today's standards, the 4004 demonstrated that complete central processing units could be manufactured on a single chip. This breakthrough made computers smaller, cheaper, and more energy-efficient.

The success of the 4004 led to rapid innovation throughout the 1970s. Intel followed with the 8-bit 8008 and 8080 processors, while competitors like Motorola and Zilog entered the market with their own designs. The 8080 processor, in particular, became the heart of many early personal computers, including the Altair 8800. These developments made computing power accessible to hobbyists and small businesses, fueling the growth of the personal computer industry.

The x86 Architecture Dominance

Intel's 8086 processor, introduced in 1978, established the x86 architecture that would dominate personal computing for decades. The 16-bit design offered significantly improved performance over previous 8-bit processors. IBM's decision to use the 8088 (a variant of the 8086 with an 8-bit external data bus) in its first personal computer, the 1981 IBM PC, cemented x86's position as the industry standard.

The 1980s saw rapid advancement in x86 processors, with Intel introducing the 80286 (1982), 80386 (1985), and 80486 (1989). Each generation brought substantial improvements in performance, memory addressing, and memory protection. The 286 added a protected mode that could address up to 16 MB, the 386 introduced 32-bit processing, and the 486 integrated the math coprocessor and an on-chip cache directly onto the processor. During this period, competitors such as AMD produced x86-compatible processors, creating competition that drove innovation forward.
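
The gains in memory addressing can be made concrete with a little arithmetic. The 8086 was a 16-bit processor with a 20-bit address bus, the 80286 widened the bus to 24 bits, and the 80386 and 80486 used 32-bit addresses. The short sketch below computes the resulting address-space sizes and models how the 8086 formed a 20-bit physical address from two 16-bit values (segment × 16 + offset). The helper names are illustrative, and the model covers only the 8086's original real mode, not the protected modes added later.

```python
# Physical address-bus widths of the x86 generations discussed above.
ADDRESS_BITS = {
    "8086/8088": 20,
    "80286": 24,
    "80386": 32,
    "80486": 32,
}


def addressable_bytes(bits: int) -> int:
    """Number of distinct byte addresses reachable with `bits` address lines."""
    return 1 << bits


def real_mode_address(segment: int, offset: int) -> int:
    """8086 real-mode translation: segment * 16 + offset, wrapped to 20 bits."""
    return ((segment << 4) + offset) & 0xFFFFF


for cpu, bits in ADDRESS_BITS.items():
    mib = addressable_bytes(bits) / 2**20
    print(f"{cpu:10s} {bits:2d}-bit bus -> {mib:,.0f} MiB")

# Two different segment:offset pairs can name the same physical byte.
print(hex(real_mode_address(0x1234, 0x0010)))
print(hex(real_mode_address(0x1235, 0x0000)))
```

Because the segment is shifted by only four bits, many segment:offset pairs alias the same byte; both calls above resolve to address 0x12350.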

The Clock Speed Race and Multicore Revolution

The 1990s witnessed an intense focus on increasing processor clock speeds. Intel's Pentium family pushed frequencies from 60 MHz at its 1993 launch to 1 GHz by 2000. This "megahertz war" between Intel and AMD led to remarkable performance gains but also created significant heat and power consumption challenges. By the mid-2000s, the pursuit of ever-higher clock speeds had run into physical limits on heat dissipation and power delivery.
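
A standard first-order model helps explain why the megahertz race hit a power wall: the switching (dynamic) power of CMOS logic is roughly P = α · C · V² · f, where α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. Higher clock speeds have generally required higher voltage, and voltage enters the formula squared. The sketch below uses made-up chip parameters, ignores static (leakage) power, and assumes, as a simplification, that voltage must rise in proportion to frequency.

```python
def dynamic_power(activity: float, capacitance_f: float,
                  voltage_v: float, freq_hz: float) -> float:
    """First-order CMOS switching power in watts: P = alpha * C * V^2 * f."""
    return activity * capacitance_f * voltage_v**2 * freq_hz


# Hypothetical chip parameters, chosen only to keep the arithmetic readable.
ALPHA = 0.2   # fraction of the capacitance switched each cycle (assumed)
CAP = 50e-9   # effective switched capacitance in farads (assumed)

base = dynamic_power(ALPHA, CAP, voltage_v=1.0, freq_hz=2.0e9)
# Assumption: a 50% clock increase needs about 50% more supply voltage.
pushed = dynamic_power(ALPHA, CAP, voltage_v=1.5, freq_hz=3.0e9)

print(f"baseline: {base:.1f} W")
print(f"pushed:   {pushed:.1f} W")
print(f"ratio:    {pushed / base:.3f}x power for 1.5x the clock")
```

Under these assumptions, 1.5 times the clock costs about 3.4 times the power (20 W versus 67.5 W), and nearly all of that extra energy leaves the chip as heat.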

The mid-2000s marked a fundamental shift in processor design philosophy. Instead of relying on clock speed increases, manufacturers began placing multiple processor cores on a single chip. AMD's Athlon 64 X2 (2005) and Intel's Core 2 Duo (2006) demonstrated that parallel processing could deliver better performance without massive clock speed increases, provided software was written to use multiple threads. This multicore approach became the new paradigm for processor development.
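
How much extra cores help depends on how much of a program can actually run in parallel. Amdahl's law, a standard model not named in the text above, gives the speedup on n cores as 1 / ((1 - p) + p / n), where p is the fraction of the work that can be parallelized. The sketch below evaluates it for a few hypothetical values of p.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup = 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical workloads: the fraction of each program that runs in parallel.
for p in (0.5, 0.9, 0.99):
    row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (2, 4, 8))
    print(f"p = {p:.2f} -> {row}")
```

Even with eight cores, a program that is only half parallel runs less than twice as fast (about 1.78x), which is why multicore chips paid off most once software was restructured around multiple threads.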

Modern Processor Architectures

Today's processors represent the culmination of decades of innovation. Modern CPUs feature multiple cores, sophisticated cache hierarchies, and advanced power management technologies. Intel's Core and AMD's Ryzen processors incorporate features such as simultaneous multithreading (which Intel brands as Hyper-Threading), integrated graphics, and AI acceleration. Manufacturing process nodes have shrunk from micrometers to nanometers, allowing billions of transistors on a single chip.
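
The value of a cache hierarchy can be illustrated with the usual average memory access time (AMAT) formula: every access pays the first level's hit time, and only the fraction that misses goes on to pay for the next level down. The latencies and miss rates below are hypothetical round numbers, not measurements of any particular CPU, and the `CacheLevel` type and `amat` function are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class CacheLevel:
    name: str
    hit_time: float   # cycles to return data on a hit at this level
    miss_rate: float  # fraction of accesses reaching this level that miss


def amat(levels: list[CacheLevel], memory_latency: float) -> float:
    """Average memory access time, with `levels` ordered innermost (L1) first.

    AMAT = hit_1 + miss_1 * (hit_2 + miss_2 * (... + miss_n * memory_latency))
    """
    cost = memory_latency
    for level in reversed(levels):
        cost = level.hit_time + level.miss_rate * cost
    return cost


# Hypothetical latencies (cycles) and local miss rates, for illustration only.
hierarchy = [
    CacheLevel("L1", hit_time=4, miss_rate=0.05),
    CacheLevel("L2", hit_time=12, miss_rate=0.30),
    CacheLevel("L3", hit_time=40, miss_rate=0.20),
]
DRAM = 200

print(f"no caches:     {DRAM} cycles per access")
print(f"with L1/L2/L3: {amat(hierarchy, DRAM):.2f} cycles per access")
```

With these assumed figures, the hierarchy brings the average access from 200 cycles down to 5.8, even though main memory itself is no faster.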

Recent developments include heterogeneous computing architectures that combine high-performance cores with efficiency cores, as seen in Apple's M-series chips. These designs optimize performance for different workloads while maximizing battery life in mobile devices. The integration of neural processing units (NPUs) directly into processors represents the latest frontier in processor evolution, enabling on-device AI processing without relying on cloud services.
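
The trade-off behind performance and efficiency cores can be illustrated with a toy policy: run each task on whichever core type finishes it before its deadline while using the least energy. The core speeds and power figures, the `pick_core` function, and the policy itself are hypothetical; real operating-system schedulers rely on much richer information about each workload.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreType:
    name: str
    ops_per_second: float  # sustained throughput (hypothetical)
    watts: float           # power draw while busy (hypothetical)

    def runtime(self, work_ops: float) -> float:
        return work_ops / self.ops_per_second

    def energy(self, work_ops: float) -> float:
        # Energy in joules = power while busy * time spent on the task.
        return self.watts * self.runtime(work_ops)


# Illustrative numbers only; real core characteristics vary by chip and workload.
PERFORMANCE = CoreType("performance", ops_per_second=4e9, watts=5.0)
EFFICIENCY = CoreType("efficiency", ops_per_second=1.5e9, watts=0.5)


def pick_core(work_ops: float, deadline_s: float) -> CoreType:
    """Choose the lowest-energy core type that meets the deadline.

    If no core type can meet it, fall back to the fastest one.
    """
    candidates = [c for c in (PERFORMANCE, EFFICIENCY)
                  if c.runtime(work_ops) <= deadline_s]
    if not candidates:
        return PERFORMANCE
    return min(candidates, key=lambda c: c.energy(work_ops))


print(pick_core(3e8, deadline_s=1.0).name)     # relaxed background task
print(pick_core(6e7, deadline_s=0.0167).name)  # work due within one 60 Hz frame
```

Under these assumed numbers, the background task lands on the efficiency core (0.1 J instead of 0.375 J), while the frame-bound task needs the performance core because the efficiency core would take 40 ms.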

Specialized Processors and Future Directions

The evolution of processors has expanded beyond general-purpose CPUs to include specialized processors optimized for specific tasks. Graphics Processing Units (GPUs) have evolved from simple display controllers to massively parallel processors capable of handling complex computational workloads. Field-Programmable Gate Arrays (FPGAs) and Application-Specific Integrated Circuits (ASICs) provide customized processing for specialized applications like cryptocurrency mining and AI inference.

Looking toward the future, several emerging technologies promise to reshape processor evolution once again. Quantum computing represents a fundamentally different approach to processing information, potentially solving problems that are intractable for classical computers. Neuromorphic computing aims to mimic the structure and function of the human brain, offering potentially revolutionary efficiency gains for certain types of computations. Photonic computing, which uses light instead of electricity, could overcome current limitations in speed and power consumption.

Sustainability and Energy Efficiency

As processor technology continues to advance, energy efficiency and sustainability have become critical considerations. Modern processor designs prioritize performance per watt, reducing the environmental impact of computing. Advances in materials science, including the exploration of graphene and other two-dimensional materials, may enable future processors that are both more powerful and more energy-efficient.

The evolution of computer processors has been characterized by exponential growth in performance and capability, tracking Moore's Law (the observation that the number of transistors on a chip doubles roughly every two years) for several decades. While the pace of traditional scaling has slowed, innovation continues through architectural improvements, specialized accelerators, and new computing paradigms. The journey from vacuum tubes to modern multicore processors demonstrates humanity's remarkable capacity for technological innovation, and the future promises even more exciting developments in computing technology.
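
To see what "exponential growth" means in practice, the sketch below projects transistor counts forward from the Intel 4004 (2,300 transistors in 1971), assuming a clean doubling every two years, the period Gordon Moore settled on in his 1975 revision. It is a naive projection for illustration, not a model of any real product line, and `moores_law_projection` is an illustrative name.

```python
def moores_law_projection(start_count: float, start_year: int, year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count assuming one doubling per `doubling_period_years`."""
    return start_count * 2 ** ((year - start_year) / doubling_period_years)


# Starting point: Intel 4004, 2,300 transistors, 1971.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    projected = moores_law_projection(2300, 1971, year)
    print(f"{year}: ~{projected:,.0f} transistors")
```

Fifty years of doubling every two years is 25 doublings, a factor of about 33.5 million, which turns 2,300 transistors into roughly 77 billion: the same "billions of transistors" scale the text attributes to modern chips.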

Understanding processor evolution provides valuable context for appreciating current technology trends and anticipating future developments in the computing landscape.